Enhancing Your Fleet with a Machine Vision System

Machine vision may sound like technology from a futuristic sci-fi movie, such as HAL in “2001: A Space Odyssey” or “Auto,” the artificial intelligence autopilot in Pixar Animation Studios’ “WALL-E.”

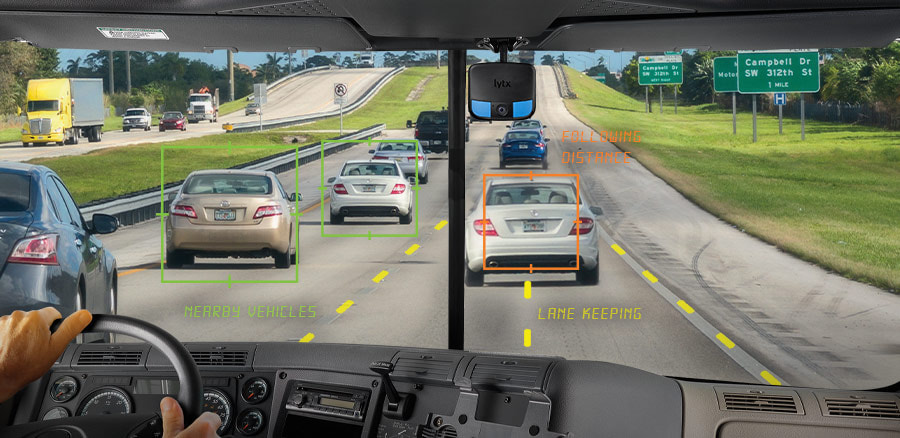

In fact, machine vision is already deployed all around us, woven into the operations of major industries, from transportation and logistics to food and pharmaceuticals. Lytx, for example, gives you the option to detect lane departure, following distance, rolling stops, and critical distance, among other things.

The market for machine vision technology was roughly $8.8 billion in 2017 and is set to surpass $18.2 billion annually by 2025, according to estimates from Grand View Research. The rapid growth is largely fueled by strong demand from the automotive and food and beverage sectors. Those industries see machine vision as an integral part of their future toolkits for applications such as navigation, manufacturing, quality assurance, service confirmation, and passenger, pedestrian, and driver safety.
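To put those two estimates in perspective, here is a small back-of-the-envelope calculation of the compound annual growth rate they imply. It assumes the 2025 figure is reached exactly in 2025 (eight years after 2017); since the forecast says the market will *surpass* $18.2 billion, the result is best read as a rough floor, not as a figure Grand View Research itself published.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1

# Market estimates quoted above, in billions of US dollars.
# Assumption: $18.2B is reached exactly in 2025, i.e. 8 years after 2017.
rate = implied_cagr(8.8, 18.2, 2025 - 2017)
print(f"Implied growth: about {rate:.1%} per year")  # about 9.5% per year
```

In other words, the forecast implies the market growing by roughly 9.5% a year, compounding.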

But what exactly is machine vision? And how does it affect fleet operations?

“Simply put, machine vision uses image and video data to analyze and make predictions,” said Stephen Krotosky, manager of applied machine learning at Lytx.

Stephen Krotosky, manager of applied machine learning, Lytx

At a basic level, machine vision can be harnessed to detect objects such as cell phones, safety cones, or hardhats. It can also detect whether passengers are present or absent, to gauge occupancy in buses and other transport vehicles.
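As a rough illustration of how detections like these might be used downstream, the sketch below assumes a hypothetical detector that reports each object it finds in a frame as a label plus a confidence score. The `Detection` format, the label names, and the 0.5 confidence cutoff are all made up for this example; it only shows the general idea of filtering confident detections and counting people to estimate occupancy.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    """One object found in a video frame (hypothetical detector output format)."""
    label: str          # e.g. "cell_phone", "safety_cone", "hardhat", "person"
    confidence: float   # model's score between 0.0 and 1.0

def objects_present(detections, labels, min_confidence=0.5):
    """Return the subset of `labels` detected with at least `min_confidence`."""
    return {d.label for d in detections if d.label in labels and d.confidence >= min_confidence}

def occupancy(detections, seat_capacity, min_confidence=0.5):
    """Estimate the share of seats taken by counting confident 'person' detections."""
    passengers = sum(1 for d in detections if d.label == "person" and d.confidence >= min_confidence)
    return min(passengers, seat_capacity) / seat_capacity

frame = [
    Detection("person", 0.92),
    Detection("person", 0.81),
    Detection("person", 0.34),      # too uncertain to count
    Detection("cell_phone", 0.77),
]
print(objects_present(frame, {"cell_phone", "hardhat"}))  # {'cell_phone'}
print(occupancy(frame, seat_capacity=40))                 # 0.05
```

The confidence cutoff is the main design lever: raising it reduces false alarms at the cost of missing some real objects.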

It does this through a “training” process in which the system is fed hundreds of thousands of images that humans have manually annotated to describe what each image contains. The system gradually “learns” what a cell phone or a cigarette looks like, so it can accurately detect those items in new images.
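To make the idea of learning from annotated examples concrete, here is a deliberately tiny sketch. Real machine vision systems train deep neural networks on raw pixels; this toy swaps in a nearest-centroid classifier and reduces each “image” to two made-up numeric features, purely to show the pattern of learning from human-labeled data and then labeling new, unseen data. The feature values and labels are hypothetical.

```python
import math
from collections import defaultdict

def train(examples):
    """'Learn' one average feature vector (centroid) per human-assigned label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    # Average each feature column within a label to get that label's centroid.
    return {label: [sum(col) / len(rows) for col in zip(*rows)] for label, rows in grouped.items()}

def predict(model, features):
    """Label a new, unannotated example with the label of the nearest centroid."""
    return min(model, key=lambda label: math.dist(model[label], features))

# Hypothetical annotated data: each "image" is reduced to two made-up features
# plus the label a human annotator gave it.
annotated = [
    ([0.50, 0.90], "cell_phone"),
    ([0.55, 0.85], "cell_phone"),
    ([0.10, 0.20], "cigarette"),
    ([0.12, 0.25], "cigarette"),
]
model = train(annotated)
print(predict(model, [0.52, 0.80]))  # cell_phone
```

The more varied the annotated examples, the better the learned centroids (or, in practice, network weights) reflect what each object really looks like.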

On a more advanced level, machine vision algorithms can be “taught” to better interpret what they see, as well as what they don’t directly see, using artificial intelligence to go a step further and draw conclusions or calculate risk.

“Typically, this approach means making use of the latest advances in deep learning,” said Krotosky.

But a machine vision model is only as smart as the data used to train it. To create an accurate model that can handle all the variations the real world throws at it, you’d need millions of unique images covering every situation imaginable. Lytx draws from a cache of images from more than 100 billion miles driven over the past 20 years in dozens of vehicle types, across all 50 states, in all weather conditions, and over numerous road types. For Lytx’s researchers, this rich cache of image data is the best available raw material for creating machine vision models specifically tailored to commercial vehicle travel.

As anyone in the business knows, however, the vast majority of miles driven by the nation’s commercial fleet are safe and free of incidents. The trick is recognizing the critical moments that generate risk: the brief phone call, the five minutes taken to eat a sandwich or smoke a cigarette, the driver who fails to fasten their seatbelt. Catching those moments accurately is a lot like looking for a needle in a haystack. Machine vision is about sifting through mountains of data to find those brief but critical moments of risk. That neat trick, called anomaly detection, is the subject of the next article in our series on Machine Vision and Artificial Intelligence.
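For a feel of what “finding the needle” can mean in the simplest possible terms, the sketch below uses a generic textbook technique, z-score outlier detection, on a made-up series of per-minute “phone in hand” scores. It is not a description of Lytx’s approach; the scores, the 3.0 threshold, and the function name are assumptions chosen for illustration.

```python
import statistics

def flag_anomalies(scores, z_threshold=3.0):
    """Return indices whose score lies more than z_threshold standard deviations above the mean."""
    mean = statistics.mean(scores)
    spread = statistics.pstdev(scores)
    if spread == 0:
        return []  # perfectly uniform data has no outliers
    return [i for i, s in enumerate(scores) if (s - mean) / spread > z_threshold]

# Hypothetical per-minute scores for one shift: mostly near zero,
# with one brief spike, the needle in the haystack.
minute_scores = [0.02] * 200 + [0.95] + [0.03] * 200
print(flag_anomalies(minute_scores))  # [200]
```

Because safe driving dominates the data, the average and spread describe “normal,” and the rare risky minute stands out sharply against them.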