Lytx’s MV+AI Technology Explained – How the DriveCam Event Recorder Detects Risks

The Machine Vision and Artificial Intelligence Technology Behind Lytx “Triggers”

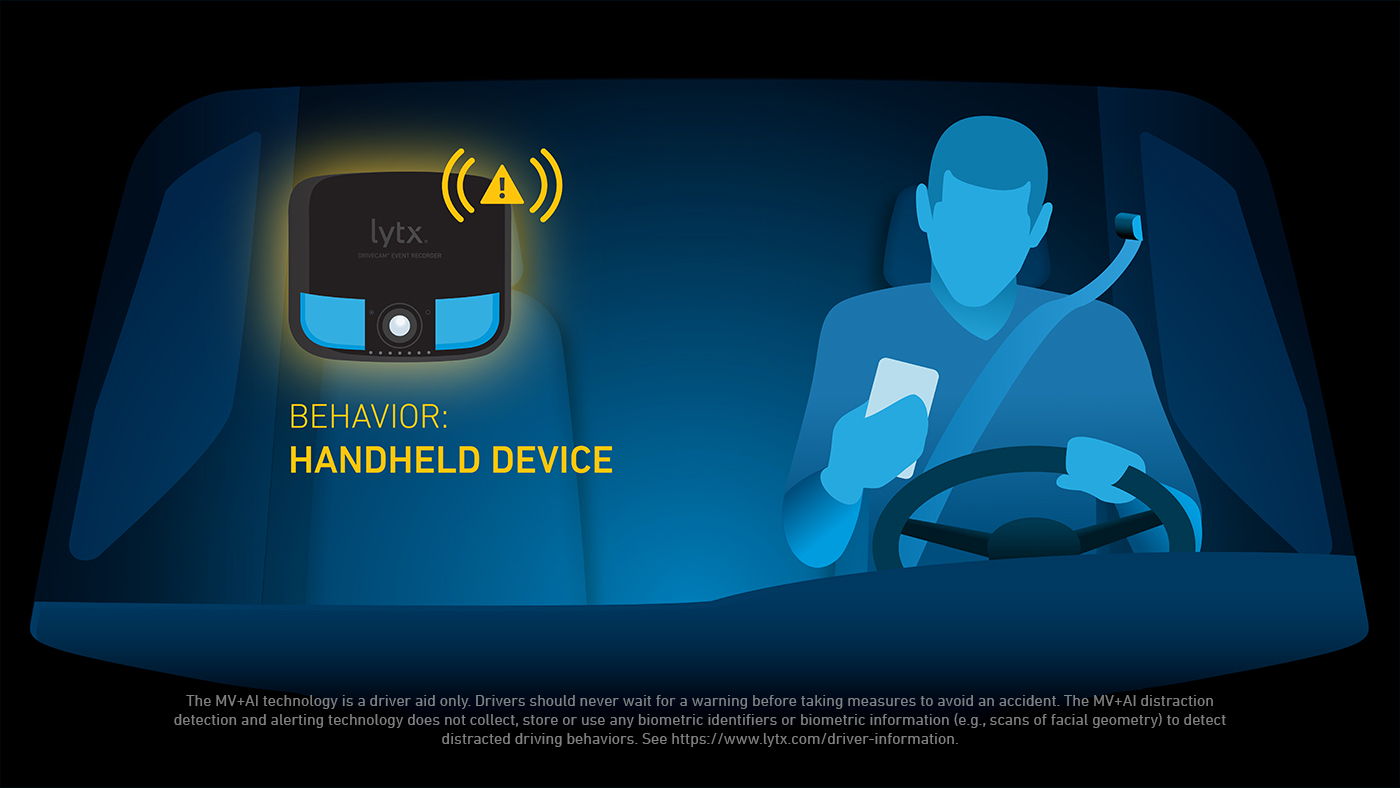

The innovative DriveCam® event recorder helps improve fleet safety by detecting risky driving behaviors on the road and in the vehicle. The device’s advanced machine vision and artificial intelligence (MV+AI) technology analyzes driver behavior and nearby vehicles to determine how a driver is performing relative to their surroundings. Specific behaviors, such as lane departure, eating or drinking, smoking, following too closely, or texting while driving, cause the DriveCam event recorder to flag the event, known as a “trigger.” Drivers are alerted to their risky behavior with real-time multilingual audio and light alerts† to help them self-correct. Alerts are delivered only when the system has high confidence in a detection, which minimizes irrelevant alerts and helps prevent alert fatigue.
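As a rough illustration of confidence-gated alerting, the sketch below issues an in-cab alert only when a detection’s confidence meets a threshold. To illustrate alert-fatigue prevention, it also adds a per-behavior cooldown. The threshold, the cooldown, the `Detection` type, and `alerts_to_issue` are all hypothetical assumptions for illustration, not Lytx’s actual parameters or implementation.

```python
from dataclasses import dataclass

# Hypothetical values chosen for illustration only; not Lytx parameters.
CONFIDENCE_THRESHOLD = 0.9
COOLDOWN_SECONDS = 30.0


@dataclass
class Detection:
    behavior: str      # e.g. "smoking", "cell_phone" (hypothetical labels)
    confidence: float  # model score in [0, 1]
    timestamp: float   # seconds since trip start


def alerts_to_issue(detections, threshold=CONFIDENCE_THRESHOLD,
                    cooldown=COOLDOWN_SECONDS):
    """Return the detections that should produce an in-cab alert.

    A detection is alerted only if its confidence meets the threshold and
    the same behavior has not already been alerted within the cooldown.
    """
    last_alert = {}  # behavior -> timestamp of its most recent alert
    issued = []
    for d in sorted(detections, key=lambda d: d.timestamp):
        if d.confidence < threshold:
            continue  # low confidence: stay silent to avoid irrelevant alerts
        previous = last_alert.get(d.behavior)
        if previous is not None and d.timestamp - previous < cooldown:
            continue  # same behavior alerted recently: suppress the repeat
        last_alert[d.behavior] = d.timestamp
        issued.append(d)
    return issued
```

Raising the threshold trades missed alerts for fewer false ones. The cooldown is one simple way, under these assumptions, to keep a single ongoing behavior from producing a stream of alerts.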

What Are the Benefits of MV+AI Technology?

Lytx®’s MV+AI technology helps fleets by providing an expanded view of risk. Insights from MV+AI can reveal whether an individual driver needs to correct a specific behavior or whether certain risky behaviors are appearing across all drivers. In the latter case, the organization may need to launch a fleet-wide safety initiative.

How the MV+AI Technology Learns

Lytx’s MV+AI technology uses information from several sensors at once to identify risky situations and driving behaviors. Machine vision recognizes objects and behaviors by analyzing images and video. AI interprets and learns from that image and video data to determine the likelihood that a particular event or behavior occurred. Combining video with data from other sensors in and around the vehicle gives the AI richer information to learn from, similar to the way our brains rely on information from each of our senses to understand what is happening around us.

Not just any data can be used to train the AI to identify and categorize risky driving behaviors. The data must come from a large database, and it must be validated. The most accurate validation comes from human reviewers. Here’s why:

Human experts surface important nuances that help the system learn faster and interpret more complex scenarios. For example, the AI might detect a lane departure and trigger a video event clip. Lane departure is highly correlated with distracted driving, such as texting. However, a human reviewer might notice that the swerve was caused by a construction zone forcing all traffic to make a sudden lane change. Nuanced data like this helps the system refine its models, anticipate what might happen next, and become more sophisticated over time. Lytx events have been reviewed by humans for over 10 years.


How Do MV+AI Triggers Work?

Machine vision recognizes an object through the DriveCam event recorder’s lens, and AI combines that information with other data, such as speed and GPS location, to determine whether the combination indicates risk.

For example, when a vehicle approaches a stop sign, machine vision technology scans the sign and recognizes it. The system also uses speed and accelerometer data to detect whether the driver came to a complete stop. If the vehicle did not come to a complete stop, the DriveCam event recorder triggers a video clip.
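The stop-sign example can be sketched as a simple rule over a short window of telemetry. This sketch assumes the vision model has already reported whether a stop sign was recognized, and that speed samples (in km/h) covering the approach are available. The 1 km/h “complete stop” tolerance, `Sample`, and `is_rolling_stop` are hypothetical. The sketch also uses only speed, whereas the system described above may also draw on accelerometer data.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical tolerance: a speed at or below this counts as a complete stop.
STOP_SPEED_KMH = 1.0


@dataclass
class Sample:
    timestamp: float  # seconds
    speed_kmh: float  # vehicle speed (assumed input)


def is_rolling_stop(stop_sign_seen: bool, samples: Sequence[Sample],
                    stop_speed_kmh: float = STOP_SPEED_KMH) -> bool:
    """Return True if a stop sign was recognized but the vehicle never stopped.

    `stop_sign_seen` stands in for the machine vision detection, and
    `samples` cover the approach to and departure from the sign.
    """
    if not stop_sign_seen or not samples:
        return False  # no sign (or no data): nothing to evaluate
    # The lowest speed reached near the sign decides whether it was a full stop.
    min_speed = min(s.speed_kmh for s in samples)
    return min_speed > stop_speed_kmh
```

In this sketch, a `True` result is what would trigger a video clip. Using the minimum speed over the window makes the rule insensitive to how quickly the driver accelerates afterward.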

Here’s a short list of just a few of the more than 100 behaviors the DriveCam event recorder can currently detect on the road using a combination of MV+AI and human review:

- Lane Departure
- Rolling Stop
- Following Distance
- Critical Distance

Driver-Facing Lens Detects Distraction

Lytx’s MV+AI technology is trained to recognize objects and actions that indicate distracted or risky driving behavior, such as using a cell phone, eating and drinking, smoking, or failing to wear a seat belt. The driver-facing camera captures images that the machine vision technology analyzes to recognize objects such as a cigarette or a cell phone. The AI is trained to classify cell phone use, smoking, eating, drinking, or not wearing a seat belt as risky.

Even when the object itself is hidden from view, the AI can detect related behaviors, such as repeatedly looking down, to reliably determine that a driver is texting. Importantly, risky distracted driving behaviors tend to happen together: almost 1 in 4 drivers engaging in one risky behavior are actually doing more than one at the same time.
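One way to picture inferring phone use from repeated downward glances is a sliding-window count over glance events. The source does not describe how Lytx’s model makes this determination, and a trained model would weigh many more cues. The window length, the glance count, and `repeated_look_down` are hypothetical. The input timestamps are assumed to come from a driver-facing head-pose detector.

```python
from collections import deque
from typing import Iterable

# Hypothetical parameters for illustration only.
WINDOW_SECONDS = 10.0
MIN_GLANCES = 3


def repeated_look_down(glance_times: Iterable[float],
                       window: float = WINDOW_SECONDS,
                       min_glances: int = MIN_GLANCES) -> bool:
    """Return True if at least `min_glances` downward glances fall within
    some `window`-second span.

    `glance_times` are timestamps (seconds) of downward-glance events.
    """
    recent = deque()
    for t in sorted(glance_times):
        recent.append(t)
        # Drop glances more than `window` seconds older than the newest one.
        while t - recent[0] > window:
            recent.popleft()
        if len(recent) >= min_glances:
            return True
    return False
```

A sliding window separates a burst of glances, which suggests sustained interaction with a device, from occasional glances spread over a long drive, such as checking a mirror or gauge.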

Why Lytx MV+AI Technology?

Lytx’s MV+AI technology is built on insights from the largest driving database of its kind, with more than 311 billion miles of commercial driving data. Our AI models are trained on more than 1 million hours of video and refined through human review for greater accuracy and precision. The technology can monitor and assess multiple in-cab risks at the same time while filtering out noise, so it detects, records, and analyzes relevant risks and distractions and minimizes unnecessary alerts. Together, these factors make Lytx MV+AI technology one of the most advanced solutions on the market. Lytx will soon roll out road-view MV+AI Gen 2, with new features including pedestrian alerts and traffic light detection.

Interested in learning more? See how your team can benefit from this revolutionary technology. Contact us or book a demo today!